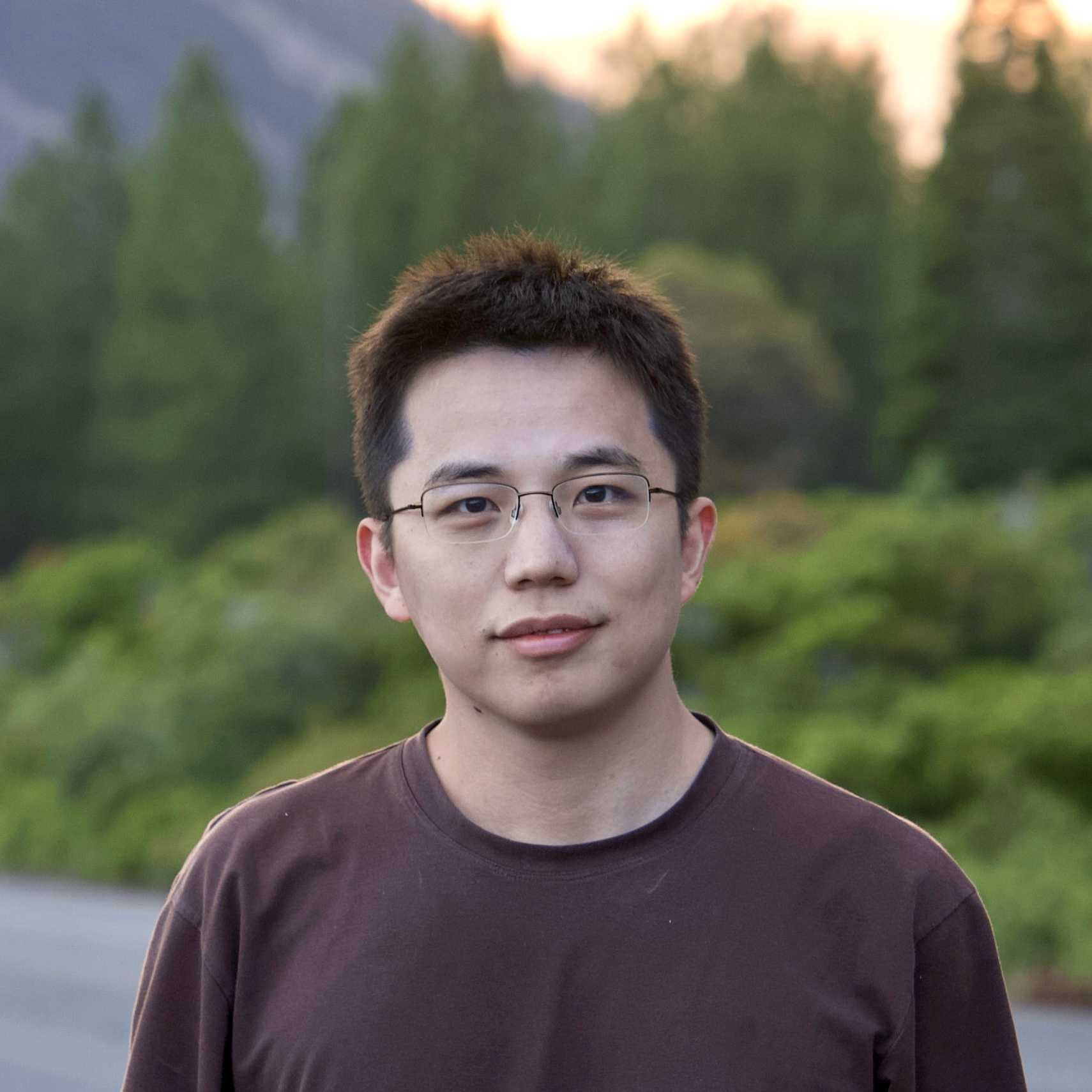

Hao Liu

I am a research scientist at Google DeepMind and an incoming Assistant Professor of Machine Learning at Carnegie Mellon University.

Previously, I completed my Ph.D. in Computer Science at Berkeley, during which I spent two years working part-time on the Google Brain team.

My research interests focus on solving intelligence by addressing problems in deep learning, neural networks, and learning objectives.